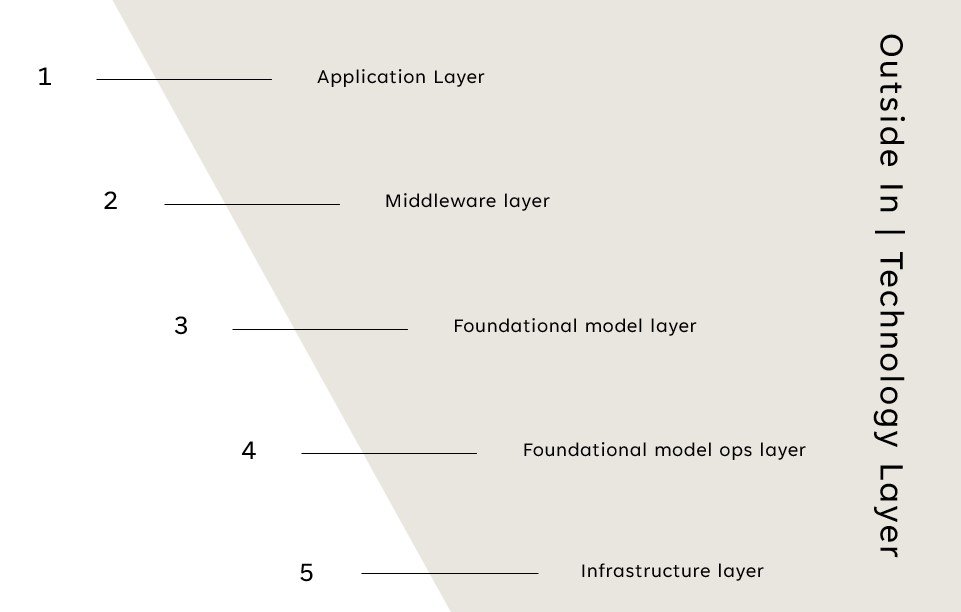

The Generative AI technology stack

According to PitchBook, generative AI companies worldwide received $4.7B in funding across 375 deals in 2022, and another $1.7B across 46 deals in Q1 2023. As an investor and technologist watching this space closely, I wanted to understand the MOAT of the Gen. AI companies mushrooming by the dozen every day. That curiosity boiled down to a simple question.

What does the Gen. AI technology stack look like today?

Like any other technology stack, it is a multi-tiered cake. The tier that has drawn the most attention recently, thanks to OpenAI, is the foundational model layer, but it is only one of five. Here is my simplified version, going top down:

- Sitting on top is the application layer, which has become a productivity staple for me over the last few months. This layer consists of end-user applications that create engaging, personalized experiences for consumers. Companies solving real and meaningful problems will rule this layer. A few names offering impressive services here today include Jasper, Kore, Harvey, Tome, and Runway.

- Second is the middleware layer, which helps businesses build foundation model applications more quickly by letting developers create impactful products on top of models such as OpenAI's GPT-4. Platforms in this space, such as LangChain and Humanloop, help enterprises move faster from prototype to production, evaluate LLMs, and continuously improve them by incorporating company data and user feedback. I believe companies in this layer have the biggest MOAT: they are embedded in developers' workflows, which makes switching costs high.

- Next is the foundational model layer, which includes open- and closed-source large language models (LLMs). This layer is the heart of the Gen. AI stack, where the algorithms sit. A few names offering impressive services in this area today include OpenAI's GPT-4, Freenome, Amperity, and Google's PaLM.

- The fourth is the foundation model operations layer, which allows enterprises to optimize, train and run their models more efficiently. Modal and OctoML operate in this layer.

- Lastly, the fifth layer is the infrastructure layer, which includes the cloud and hardware infrastructure behind easy-to-use AI packages that cater to different use cases and industries. Cloud and hardware providers such as AWS, Azure, IBM, and Nvidia dominate this space.

With IBM, Microsoft, Google, AWS, Meta, and Baidu announcing Gen. AI platforms over the last few months, I expect their upcoming offerings to cut across most (if not all) of these layers. That makes sense: per a PitchBook report, the enterprise Gen. AI opportunity will reach $98.1B by 2026, growing at a 32% CAGR.

I will keep digging for promising Gen. AI players and their MOAT as capabilities evolve. But did you notice Nvidia's fleeting entry into the $1T club in May 2023? It evokes the gold rush, where the real fortunes were made by the providers of the shovels, not the seekers of the gold.

This blog only represents my personal views. They do not reflect the thoughts, intentions, plans or strategies of my clients or employer.